The Traditional Levels of Data Modeling

A High-Level Look at Conceptual, Logical, and Physical Data Modeling

Here’s a high-level view of the traditional levels of data modeling - conceptual, logical, and physical. I’ve tried to keep the discussion focused on the what and why, versus diving straight into showing you how to build a data model.

The following sections will cover how to implement these levels with Entity-Relationship modeling, extending these levels to unstructured data, and much more.

As always, comment where you wish. If you find errors, please submit them here.

Thanks,

Joe

Struggling to find value in enterprise data modeling? Ellie.ai is an AI-powered data modeling tool that enables you to design & build data products 10x faster.

Learn More About Ellie's Data Modeling Practices

Thanks to Ellie.ai for sponsoring this post

The traditional levels of data modeling are conceptual, logical, and physical. As mentioned above, these flow linearly from high-level conceptualization to physical implementation. When I’ve seen how these levels are taught elsewhere, most resources tend to get deep into the implementation details out of the gate. I find it hard for readers to learn what these levels are and why they exist. Here, we’ll first look at these levels in a generic sense and why they’re the heart and soul of data modeling. Then, we’ll provide an example of building each level using the entity-relationship approach.

Conceptual Data Modeling

Conceptual data modeling (CDM) is the initial and highest level of data modeling. At this stage, your goal is to capture the big picture - what are the fundamental concepts you’re modeling and how do they relate to each other? Your job is to listen to “The Business” - domain experts and people in the trenches who understand how things work. You’ll learn their “language of the business” and identify the core concepts necessary to model a domain, subject area, or business process.

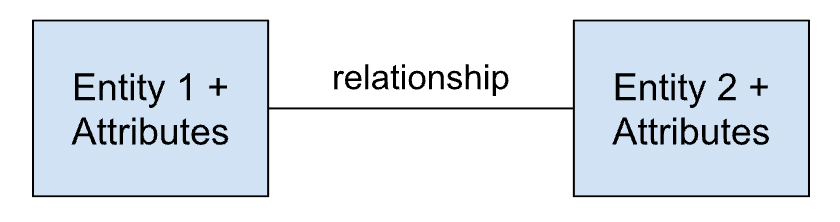

What you’re NOT doing yet is worrying about specific implementation details of the data model. No database tables or data types. That comes later. For now, your focus is on clarity, structure, and shared understanding at the highest level of abstraction. Generically, you’re identifying entities and how they relate to each other.

The CDM is a communication tool. My friend and conceptual data modeling expert John Giles calls it a “town plan.” Earlier, I referenced designing and building a house. Now, expand this to building a town. While you could design a town in an ad hoc way (and many older cities resemble “accidental” design over the centuries), you can choose to be intentional in your efforts. A town plan brings together different ideas for the future - what do you want your community to be? In much the same way, Giles recommends building a Data Town Plan, which he describes this way “For a city, or a Data Town Plan, you start top-down with the big picture. Too many Enterprise Data Model initiatives get buried in costly and unnecessary detail. Instead, embrace the helicopter-views of the business leaders and leave technical details to IT professionals who actually need nuts and bolts particulars.”1 Here, you’re trying to understand what is and what could be. The Data Town Plan is a CDM that brings the business and technical stakeholders together on a shared vision for how the business operates and how that’s translated into data. This last point must be reiterated - the CDM helps the business understand the data model. Without the business feeling a level of involvement and understanding of the data model, the results of your data model will not have nearly as much impact. At some point, the business will ask, “What does this data mean?” or even worse, “This data is wrong!” So, bring the business along for the ride in your early data modeling efforts, using the CDM as the basis of shared understanding.

Using whatever sensory tools are available to you, stay alert. Read the documentation and anything else that will help you better understand what you’re trying to model. If you’re meeting with people or looking at a workflow, observe what’s happening around you and what people are talking about. Notice what people say, especially if certain words or phrases keep recurring. Common and shared vocabulary will help you hone in on essential business concepts.

Designing a CDM should be as low-tech as possible at the start. A pen and one page of paper, or a whiteboard and some markers, will work fine. Sketch how different concepts relate to each other. Do this while talking with stakeholders and ask them if what you’re drawing makes sense to them. And remember, drawing is about promoting conversation and questions. My friend Larry Burns says, “The most important thing a data modeler can do is ask questions. Data modeling is not about drawing, it’s about questioning.”2 When it comes to recording this information, leverage technology in a way that keeps the discussions as free-flowing and frictionless as possible. Record the discussions (video or audio) and use voice transcription if you want a written version. These conversations are about “learning to see” the business and how processes and information flow and relate. As Burns further says, “The data model itself is not as important as the conversations the data model promotes.” The heart of conceptual modeling is discovering the things necessary to the business and how these things relate to each other.

Logical Data Modeling

If the conceptual data model (CDM) is a town plan—an abstract view of how everything fits together, then the logical data model (LDM) is where we start sketching the blueprints for the actual buildings, roads, etc. The LDM is an intermediate step between conceptual and physical data modeling. You might model entities, relationships, and attributes using the relational model or a Star Schema for an analytical model. The choice of modeling technique depends on your use case.

Like the CDM, an LDM is technology agnostic. You’re not yet choosing storage engines or optimizing queries. Instead, you’re taking the ideas from the CDM and shaping them into something a data system could implement, without worrying about how it will be implemented.

The big difference between CDM and LDM design is the level of detail. LDM aims to give your CDM a design that will be translated into a physical manifestation in a database or other data system. The logical model is more detailed than the conceptual model. Here, you might define the attributes (columns) for each entity (table), or the relationships of entities (one-to-many, many-to-many, etc.). If you’re relationally modeling, you’ll normalize your data at the logical layer. Again, no physical optimizations are done, as those are reserved for the physical data modeling step.

It’s worth noting that logical models can take many forms. We mentioned normalization for relational data. If you’re building for analytics, your logical model might be a star schema. If you’re designing for a document database, your model might favor embedded relationships. The point of logical modeling isn’t to force everything into tables, but to give structure and shape to the domain in a way that matches its use.

Some people skip logical data modeling and jump straight to implementing vague ideas into a physical schema design. Don’t do this, as you’ll inevitably jump back to logical modeling when you’re reworking your crappy physical implementation. On the opposite end, it’s possible to over-optimize your logical design, say, over-normalizing. While normalization is a tool, it’s not a religion. Balance the avoidance of data redundancy against breaking your data apart more than your use case demands. Finally, the design should be “just in time.” Don’t over-model your data to anticipate every hypothetical future scenario. Instead, design for today’s realities and evolve as needed. This is where the discussions and collaborations in your conceptual model will be critical to building a useful logical model. As with the conceptual model, the logical model is most powerful when used as a collaborative thinking tool. Use it to validate your understanding, align your team, and prepare for the technical constraints and trade-offs you’ll face at the physical layer.

Physical Data Modeling

“Decide what data you want first, then decide how to best represent it in storage. Physical design should always be done after logical design” - Chris Date

You have your town blueprint (LDM), and now it’s time to build. The physical data model (PDM) translates abstract relationships and normalized tables into actual database objects: tables, columns, indexes, constraints, storage formats, and more.

When moving from LDM to PDM, you’re now tying your design to a specific technology or platform. You’ll specify things like:

Specific data types (VARCHAR, DATE, FLOAT, etc.)

Indexes (primary, secondary, composite)

Partitioning strategies

Constraints (uniqueness, NOT NULL, referential integrity)

Tablespaces, storage formats (e.g., columnar vs. row)

For example, your logical model may define a Customer.Email attribute. In the physical model, you’ll decide whether that attribute should be VARCHAR(255), how it’s indexed, whether it’s nullable, and what constraints should be applied.

The decisions you make here are influenced (sometimes heavily) by the capabilities and limitations of your data platform. If you’re using a relational database like Postgres, you’ll want to focus on building normalized tables with indexes where appropriate. On the other hand, if you’re implementing your model in an analytical database like Snowflake, you’ll need to evaluate how column reads/writes, separation of compute and storage, and micropartitions impact your logical design. Are you better off using a Star Schema, one big table, or Data Vault? This is where your logical and physical design will need further evaluation against the technical capabilities and limitations of your chosen technology or platform.

At the physical level, you’re thinking about things like query, ingestion, and storage performance. Which queries matter the most? Which fields need indexes, sort keys, or clustering? How will you load data into your model? Is it efficient and cost-effective? Finally, look at security and governance. What data is visible to whom? Again, this is where the conversations with the stakeholders in the conceptual modeling exercises come back. What do the stakeholders expect regarding the service level agreement (SLA)? While the data model needs to meet the conceptual requirements, it also needs to meet user requirements in terms of usability. The physical model is closest to the metal and often most influenced by non-functional requirements.

A good physical model is faithful to the logical model but shaped to meet the realities of your technology and use case. If the conceptual model is the vision, and the logical model is the blueprint, then the physical model is the infrastructure: concrete, wood, steel, PVC pipe, wires, etc.

Let’s now look at an example of creating a data model, from conceptual to logical to physical implementation. We’ll use the very popular entity-relationship model to guide this exercise.

The Data Elephant in the Board Room, page 7

Data Model Storytelling, page 15

Designing any system should start with the bird’s-eye view (the what), followed by the tech-agnostic how, and finally the tech-informed how. Skipping to the last step, or doing a sloppy version of the second, is fine for a POC, but has no place in real-world production.

It still baffles me that systems are built this way. It’s like jumping straight into code without knowing what you're coding.

Maybe we should call this whole modeling style vibe modeling?

(+ 2 small typos submitted via the form.)

Levels of data modeling help organize data into conceptual domains or functional areas, which hopefully leads to business domain ownership. As well has understanding the dependency of entities across domains.